Always at 23.59 on the same day as your TA session (unless another deadline has been agreed with the TA).

Consult the deadline for your class on the delivery plan.

The primary learning goal of this iteration is understanding and implementing Broker delegates to make HotStone into a distributed system. A secondary learning goal is to reinforce how test doubles can be used to automate testing of (much of) a distributed system. Third, you will revisit equivalence class (EC) testing.

The product goal is to get all HotStone methods that are purely pass-by-value developed.

No kata this week.

HotStone is suited for a Broker based client-server system: The central Game interface is a Facade, which makes it the natural place to split the system between client and server. On a central server a GameServant keeps track of the game's state, while a number of clients each run a HotStone GUI (one for each player) which interacts with the game state via GameClientProxy, CardClientProxy, and HeroClientProxy. However, HotStone is also complex in the sense that the GameServant will create new objects (like new cards) that must be accessible on the client side. In addition, it uses the Observer pattern for the UI, which our Broker pattern cannot handle and which thus needs special care.

Therefore, the development will be broken into two iterations:

Broker takes some time to understand as there are quite a lot of moving parts involved. Be sure to study my lecture slides "W11-1 Mandatory Intro" carefully, or find the lecture on Panopto/Zoom. The slides contain detailed guides on how to start the process of solving the exercises.

Download the hotstone-broker-start (ZIP) and merge it carefully into your HotStone project using the process I outline below in the Hints section.

Use TDD and test doubles to develop well-tested implementations of the Broker roles ClientProxy and Invoker for the Game's purely pass-by-value methods, all under automated testing control.

Find details in the report template about how to document your work.

Note: There are only a few methods that are purely pass-by-value: getTurnNumber(), getHandSize(who), endTurn(), etc.

As a simplification you should let your server handle only a single game (that is, a single GameServant instance). In the Broker Starter Code, you will find an initial (failing) test case in hotstone.broker.TestGameBroker that you can use as a starting point.
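The call chain described above can be sketched roughly as follows. This is a minimal illustration, not the starter code: the `Game` interface is cut down to two methods, the operation name strings are invented, and a plain `Function<String, String>` stands in for the library's request handling roles. The point is only that the ClientProxy and the servant share one interface, and that a test double can replace the network by calling the Invoker directly.

```java
import java.util.function.Function;

// Hypothetical, cut-down stand-in for the HotStone Game interface.
interface Game {
  int getTurnNumber();
  void endTurn();
}

// Servant role: the real game state on the server (here it only counts turns).
class GameServant implements Game {
  private int turnNumber = 0;
  public int getTurnNumber() { return turnNumber; }
  public void endTurn() { turnNumber++; }
}

// Invoker role: maps an incoming operation name to a servant call
// and marshals the result into a reply string.
class GameInvoker {
  private final Game servant;
  GameInvoker(Game servant) { this.servant = servant; }

  String handleRequest(String operationName) {
    switch (operationName) {
      case "game-get-turn-number":   // invented operation name
        return Integer.toString(servant.getTurnNumber());
      case "game-end-turn":          // invented operation name
        servant.endTurn();
        return "";
      default:
        throw new IllegalArgumentException("Unknown operation: " + operationName);
    }
  }
}

// ClientProxy role: same interface as the servant, but every call is
// forwarded as a request over some transport.
class GameClientProxy implements Game {
  private final Function<String, String> transport;
  GameClientProxy(Function<String, String> transport) { this.transport = transport; }

  public int getTurnNumber() {
    return Integer.parseInt(transport.apply("game-get-turn-number"));
  }
  public void endTurn() {
    transport.apply("game-end-turn");
  }
}

class BrokerSketch {
  // Test double for the network: the "transport" is a direct method call
  // into the Invoker, so the whole chain runs in one JVM.
  static Game createGameOverFakeTransport() {
    GameInvoker invoker = new GameInvoker(new GameServant());
    return new GameClientProxy(invoker::handleRequest);
  }

  public static void main(String[] args) {
    Game game = createGameOverFakeTransport();
    game.endTurn();
    System.out.println(game.getTurnNumber()); // prints 1
  }
}
```

Because only pass-by-value data (an `int`, or nothing) crosses the transport, neither side needs to know about object identities yet.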

Use TDD, test doubles, and "FakeSomeOfIt" to develop well-tested Broker role implementations of the ClientProxies and Invoker(s) to handle all Card and Hero methods.

Find details in the report template about how to document your work.

Important: Handle the server side method dispatch for Game, Card and Hero methods in a single "blob" Invoker. Otherwise you will run into trouble in the Broker II exercise; find details in the W11-1 slides.
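One possible reading of a single "blob" Invoker is one class with one dispatch over all operation names, regardless of whether the target is the Game, a Card, or a Hero. The sketch below assumes this reading. The interfaces are cut-down stand-ins, the operation names and object ids are invented, and looking objects up in plain maps is an assumption made only to keep the example runnable; the W11-1 slides describe the intended approach.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, cut-down stand-ins for the HotStone interfaces.
interface Game { int getTurnNumber(); }
interface Card { String getName(); int getAttack(); }
interface Hero { int getMana(); }

// Simple stub card with fixed values, used only for this illustration.
class StubCard implements Card {
  private final String name;
  private final int attack;
  StubCard(String name, int attack) { this.name = name; this.attack = attack; }
  public String getName() { return name; }
  public int getAttack() { return attack; }
}

// One Invoker handling Game, Card, and Hero operations in a single switch.
class BlobInvoker {
  private final Game game;
  private final Map<String, Card> cards;   // assumed lookup: object id -> card
  private final Map<String, Hero> heroes;  // assumed lookup: object id -> hero

  BlobInvoker(Game game, Map<String, Card> cards, Map<String, Hero> heroes) {
    this.game = game;
    this.cards = cards;
    this.heroes = heroes;
  }

  String handleRequest(String objectId, String operationName) {
    switch (operationName) {
      case "game-get-turn-number":
        return Integer.toString(game.getTurnNumber());
      case "card-get-name":
        return cards.get(objectId).getName();
      case "card-get-attack":
        return Integer.toString(cards.get(objectId).getAttack());
      case "hero-get-mana":
        return Integer.toString(heroes.get(objectId).getMana());
      default:
        throw new IllegalArgumentException("Unknown operation: " + operationName);
    }
  }
}

class BlobInvokerSketch {
  // Wires the invoker to stubs with fixed, made-up values.
  static BlobInvoker createInvoker() {
    Map<String, Card> cards = new HashMap<>();
    cards.put("card-1", new StubCard("Uno", 1));
    Map<String, Hero> heroes = new HashMap<>();
    heroes.put("hero-1", () -> 3);
    return new BlobInvoker(() -> 7, cards, heroes);
  }

  public static void main(String[] args) {
    BlobInvoker invoker = createInvoker();
    System.out.println(invoker.handleRequest("card-1", "card-get-name")); // prints Uno
  }
}
```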

Extend the provided MANUAL test client (target: 'hotstoneStoryTest'), and demonstrate distributed execution on 'localhost'.

Find details in the report template about how to document your work.

Test your developed code in exercise 1.1 by letting the manual test client execute a user story ala:

Define a minimal set of test cases for the 'augmentMinion(...)' function defined by the SigmaStone game (FRS § 37.8.2), using the systematic black-box testing technique equivalence class partitioning.

This is a purely theoretical exercise; SigmaStone should not be implemented.

You should assume that preconditions are met, so ignore situations like 'trying to attack with the opponent's card', 'player is not in turn', etc.

Consider the representation property carefully and repartition when you are in doubt. For instance, field support is at first glance just the range 0..4. However, 0 is the neutral element and is not representative: if all test cases use field support = 0, then no test will validate whether the support is calculated correctly or added correctly. Thus, this set must be repartitioned. Next, you may consider whether the opponent's fielded minions of the same class are added as support by mistake.
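The neutral element argument can be made concrete with a small sketch. The rule below (result = base value + field support) is invented purely for illustration and is not the rule from the FRS; the "defective" variant simply forgets to add the support. A test case only detects the defect when the two variants disagree.

```java
class NeutralElementDemo {
  // Hypothetical "correct" rule, invented for this example only.
  static int correctAugment(int base, int fieldSupport) {
    return base + fieldSupport;
  }

  // Defective variant: the support is never added.
  static int defectiveAugment(int base, int fieldSupport) {
    return base;
  }

  // A test case reveals the defect only if the two variants give different results.
  static boolean testCaseDetectsDefect(int base, int fieldSupport) {
    return correctAugment(base, fieldSupport) != defectiveAugment(base, fieldSupport);
  }

  public static void main(String[] args) {
    System.out.println(testCaseDetectsDefect(3, 0)); // prints false
    System.out.println(testCaseDetectsDefect(3, 2)); // prints true
  }
}
```

With field support = 0 both variants return the same value, so 0 does not represent the rest of the range 0..4, which is why the set needs repartitioning.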

Deliveries:

Take care with the 'build.gradle' file in the new ZIP file, as you have to merge its contents (new dependencies and new tasks) into your existing build file from the MiniDraw exercises. Also make sure to merge the 'gradle.properties' file.

The code templates are in the 'broker' packages and in the src/main/resources folder and should not overwrite any of your existing code.

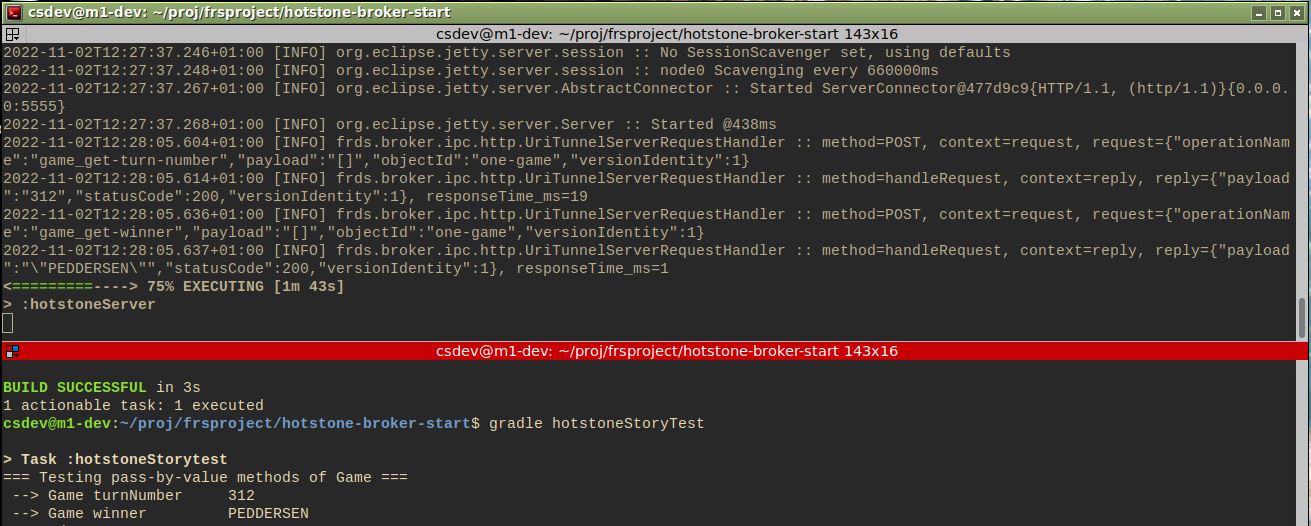

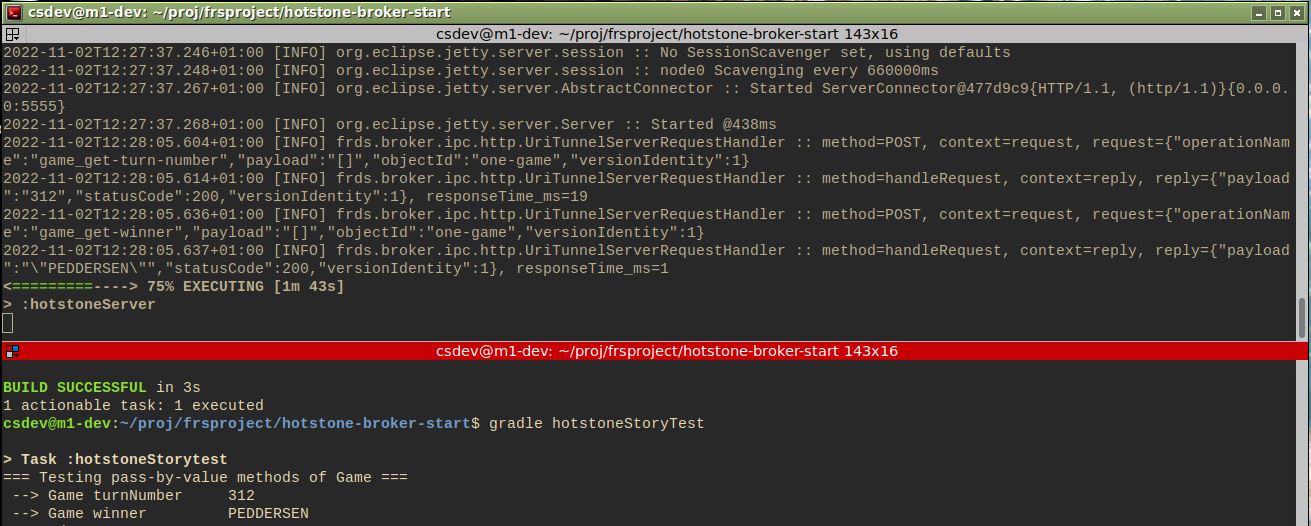

Exercises 1.1 and 1.2 use a FakeObject IPC layer/network layer. The goal of the 1.3 Integration Test exercise is to demonstrate that a real client-server system is 'clean code that works'. As shown in my own solution below, I first start the hotstone server in one shell ('hotstoneServer'); and next run the manual test client program ('hotstoneStoryTest') in a second shell. Thus they run as a real client-server system using the network, even though they communicate over 'localhost' and thus not "very remotely".

Your submission is evaluated against the learning goals and adherence to the submission guidelines. The grading is explained in Grading Guidelines. The TAs will use the Iteration 9 Grade sheet to evaluate your submission.

| Learning Goal | Assessment parameters |
|---|---|
| Submission | Git repository contains merge request associated with "iteration9". Git repository is not public! Integration test targets work ('hotstoneServer' and 'hotstoneStoryTest'); required artefact (report following the template including having good quality screenshots that outline the story) is present. |
| Broker/Game Implementation | The implementations of the Broker delegates for the Game role are functionally correct (allow client-server distribution), respect role responsibilities (is a correct Broker pattern), and the code is clean. The report documents the process and the resulting code correctly. |
| Broker/Card and Hero Implementation | The implementations of the Broker delegates for the Card and Hero roles are functionally correct (allow client-server distribution), respect role responsibilities (is a correct Broker pattern), and the code is clean. The report documents the process and the resulting code correctly. |
| Test Doubles | Test doubles are used correctly to support automated testing of Broker delegates and are correctly discussed and presented in the report. |
| Integration Test | The integration test correctly runs a distributed story test, and represents a good integration test of the Game's methods. |
| Systematic Testing | A correct EC partitioning has been made, clearly describing and arguing for the partitioning heuristics used, and documented correctly in an EC table. Coverage and representation properties have been analyzed and discussed. A correct set of test cases has been generated using proper application of Myers' heuristics and is documented in a proper extended test case table. |